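It has been a long time since I last wrote a crawler, and I had probably forgotten most of how to do it. So here I am, digging up my old notes and writing one again!

As before, this is a fairly simple site. The goal is to scrape its image resources, and I used multithreading for the downloads. If you want to get really good at crawling, or get a job doing it, you will have to move toward JS reverse engineering to get past anti-crawling measures, and toward scraping data from Android mobile apps.

Compared with PHP, I still prefer Python. After all, I have been copying code for so long and coasting for so much time. Part of why I enjoy coding is probably that I can change things however I like, follow my own ideas, and still get a working result. In other words, I long for freedom!

Back to the topic!

First, analyze the pages

Home page

Pagination

Detail page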

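The overall structure is clear and fairly simple, mainly because the site has no anti-crawling restrictions. Of course, if you hit it too frequently you will still get 443 errors (HTTPS connections on port 443 being refused)!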
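One way to cope with those failures is to wait and retry with a growing delay. The following is only a minimal sketch, not something the crawler in this post does: `fetch_with_retry` and its parameters are names made up for illustration, and `fetch` stands for any function that takes a URL and raises on failure (for example a thin wrapper around `requests.get`).

```python
import time


def fetch_with_retry(fetch, url, attempts=3, base_delay=2.0, sleep=time.sleep):
    """Call fetch(url); on failure wait base_delay, 2*base_delay, ... and try again.

    Hypothetical helper: `fetch` is any callable that raises on failure.
    `sleep` is injectable so the waiting can be replaced in tests.
    """
    for attempt in range(attempts):
        try:
            return fetch(url)
        except Exception as exc:  # in real code, narrow this to the network errors you expect
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the last error
            delay = base_delay * (2 ** attempt)  # exponential backoff
            print(f"Request failed ({exc}); retrying in {delay:.0f}s")
            sleep(delay)
```

With the defaults, a request that keeps failing is tried three times, with pauses of 2 and 4 seconds in between, before the error is passed on.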

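In addition, some category pages use different XPath nodes. This can be handled by checking which node actually matches and processing each case accordingly.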
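That check can be written once as a small fallback helper. This is a sketch under assumptions: `first_match` is a hypothetical name, and `query` is any function that takes an XPath expression and returns a list, such as the `xpath` method of an lxml tree. The two gallery expressions are the ones this crawler uses; the demo feeds in a stand-in page so it runs without a network connection.

```python
def first_match(query, expressions):
    """Return the first non-empty result of query(expr), trying expressions in order."""
    for expr in expressions:
        result = query(expr)
        if result:
            return result
    return []  # nothing matched on any layout


# The two gallery layouts seen on this site, most common first.
GALLERY_XPATHS = [
    '//section[@class="gallery"]//img/@src',
    '//section[@class="gallery border"]//img/@src',
]

# Stand-in for tree.xpath on a page that only has the second layout.
fake_page = {'//section[@class="gallery border"]//img/@src': ["a.jpg", "b.jpg"]}


def fake_xpath(expr):
    return fake_page.get(expr, [])


print(first_match(fake_xpath, GALLERY_XPATHS))  # ['a.jpg', 'b.jpg']
```

In a real crawler, the call would be `first_match(tree.xpath, GALLERY_XPATHS)`, and a new page layout only needs one more entry in the list.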

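Key code snippets

Since I had not written a crawler in a long time, I took the chance to brush up on writing classes and built this simple crawler as one. The code may be a bit verbose and not very elegant!

Two ways to implement multithreading

Method 1: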

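    def thread_imgs(self, imgs):
        # Start one thread per image, then wait for all of them to finish.
        threadings = []
        for img in imgs:
            t = threading.Thread(target=self.down_img, args=(img,))
            threadings.append(t)
            t.start()
        for x in threadings:
            x.join()
        print("Multithreaded image download finished!")

Method 2: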

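    def get_imgs(self, imgs):
        try:
            # With no argument, the pool size defaults to the number of CPU cores;
            # use ThreadPool(4) to get exactly 4 workers.
            pool = ThreadPool()
            results = pool.map(self.down_img, imgs)
            pool.close()
            pool.join()
            print("All images collected!")
        except Exception as e:
            print(f"Error: {e}")
            print("Error: unable to start thread")
        print("Multithreaded image download finished!")

Fetching and processing the detail page content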

    def get_detail(self, href):
        response = self.get_response(href, 6)
        html = response.content.decode('utf-8')
        tree = etree.HTML(html)
        h1 = tree.xpath('//h1[@class="page-title-text"]/text()')[0]
        pattern = r"[\/\\\:\*\?\"\<\>\|]"
        title = re.sub(pattern, "_", h1)  # replace with underscores
        path = f'{title}/'
        self.path = path
        os.makedirs(self.path, exist_ok=True)
        print(title)
        infos = tree.xpath('//dl[@class="details-list b"]//text()')
        info = ''.join(infos)
        if info == '':
            # Some pages use a different layout; fall back to the main content node.
            infos = tree.xpath('//main[@class="product-content"]//text()')
            info = ''.join(infos)
        print(info)
        with open(f'{self.path}{title}.txt', 'w', encoding='utf-8') as f:
            f.write(f'{title}\n{info}')
        imgs = tree.xpath('//section[@class="gallery"]//img/@src')
        # print(len(imgs))
        if imgs == []:
            imgs = tree.xpath('//section[@class="gallery border"]//img/@src')
        print(imgs)
        # self.thread_imgs(imgs)
        self.get_imgs(imgs)
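The title cleanup in `get_detail` is worth looking at on its own, because the title becomes both a folder name and a file name. Here is a small sketch of the same idea: `safe_title` and its `fallback` argument are hypothetical names, and the fallback for an empty title is an addition of mine, not something the crawler does.

```python
import re

# Characters that are not allowed in Windows file names: \ / : * ? " < > |
INVALID_CHARS = re.compile(r'[\\/:*?"<>|]')


def safe_title(raw, fallback="untitled"):
    """Replace characters that are illegal in file names with underscores."""
    cleaned = INVALID_CHARS.sub("_", raw).strip()
    return cleaned or fallback  # never return an empty folder name


print(safe_title("Chair / Table: 01?"))  # Chair _ Table_ 01_
```

Keeping this in one function means the folder name and the `.txt` file name always come out of the same rule.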

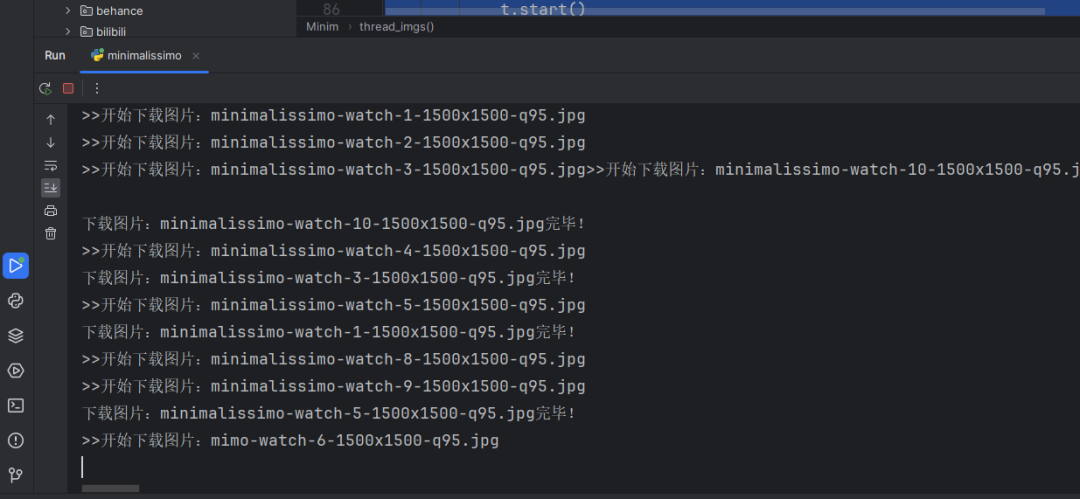

Result of a run

The full source code is attached for reference:

    # -*- coding: UTF-8 -*-
    # minimalissimo website data collection
    # 20240726 by WeChat official account: Python与SEO学习
    # https://minimalissimo.com/page:2

    import requests
    import os
    from lxml import etree
    import time
    import random
    import re
    import threading
    from multiprocessing.dummy import Pool as ThreadPool


    class Minim(object):
        def __init__(self):
            # Pool of User-Agent strings; one is picked at random per request.
            self.ua_list = [
                'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.163 Safari/535.1',
                'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36',
                'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11',
                'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0',
                'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1',
                'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
                'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
                'Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11',
            ]
            self.pagenum = 108
            self.url = "https://minimalissimo.com"
            self.path = None

        def get_response(self, url, timeout):
            # The parameter is named timeout so it does not shadow the time module.
            ua = random.choice(self.ua_list)
            headers = {
                'User-Agent': ua,
            }
            response = requests.get(url=url, headers=headers, timeout=timeout)
            return response

        def get_urls(self, page):
            url = f'{self.url}/page:{page}'
            response = self.get_response(url, 6)
            html = response.content.decode('utf-8')
            tree = etree.HTML(html)
            hrefs = tree.xpath('//article[@class="thumb b"]/a[@class="thumb-content"]/@href')
            # print(len(hrefs))
            print(hrefs)
            return hrefs

        def get_detail(self, href):
            response = self.get_response(href, 6)
            html = response.content.decode('utf-8')
            tree = etree.HTML(html)
            h1 = tree.xpath('//h1[@class="page-title-text"]/text()')[0]
            pattern = r"[\/\\\:\*\?\"\<\>\|]"
            title = re.sub(pattern, "_", h1)  # replace with underscores
            path = f'{title}/'
            self.path = path
            os.makedirs(self.path, exist_ok=True)
            print(title)
            infos = tree.xpath('//dl[@class="details-list b"]//text()')
            info = ''.join(infos)
            if info == '':
                infos = tree.xpath('//main[@class="product-content"]//text()')
                info = ''.join(infos)
            print(info)
            with open(f'{self.path}{title}.txt', 'w', encoding='utf-8') as f:
                f.write(f'{title}\n{info}')
            imgs = tree.xpath('//section[@class="gallery"]//img/@src')
            # print(len(imgs))
            if imgs == []:
                imgs = tree.xpath('//section[@class="gallery border"]//img/@src')
            print(imgs)
            # self.thread_imgs(imgs)
            self.get_imgs(imgs)

        def thread_imgs(self, imgs):
            threadings = []
            for img in imgs:
                t = threading.Thread(target=self.down_img, args=(img,))
                threadings.append(t)
                t.start()
            for x in threadings:
                x.join()
            print("Multithreaded image download finished!")

        def get_imgs(self, imgs):
            try:
                # With no argument, the pool size defaults to the number of CPU cores;
                # use ThreadPool(4) to get exactly 4 workers.
                pool = ThreadPool()
                results = pool.map(self.down_img, imgs)
                pool.close()
                pool.join()
                print("All images collected!")
            except Exception as e:
                print(f"Error: {e}")
                print("Error: unable to start thread")
            print("Multithreaded image download finished!")

        def down_img(self, img):
            imgname = img.split('/')[-1]
            print(f">> Downloading image: {imgname}")
            r = self.get_response(img, 6)
            with open(f'{self.path}{imgname}', 'wb') as f:
                f.write(r.content)
            print(f"Image downloaded: {imgname}")

        def main(self):
            for page in range(1, self.pagenum + 1):
                print(f">> Crawling list page {page}..")
                hrefs = self.get_urls(page)
                for href in hrefs:
                    print(f">> Crawling detail page {href}..")
                    self.get_detail(href)
                    print(f">> Detail page {href} crawled successfully!")
                    time.sleep(8)
                print(f">> List page {page} crawled successfully!")
                time.sleep(6)


    if __name__ == '__main__':
        spider = Minim()
        spider.main()
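As a possible third variant of the download step, the standard library's `concurrent.futures` lets you cap the number of threads explicitly and see which images failed, instead of one failure aborting the whole batch. This is a sketch only, not part of the source above: `download_all` is a hypothetical name, and `download` stands for any function that takes an image URL, such as `down_img`.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_all(download, urls, max_workers=4):
    """Run download(url) for every URL on at most max_workers threads.

    Returns (done, failed): the URLs that succeeded, and (url, exception)
    pairs for the ones that raised.
    """
    done, failed = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each future back to its URL so errors can be reported per image.
        futures = {pool.submit(download, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                future.result()  # re-raises whatever download() raised
                done.append(url)
            except Exception as exc:
                failed.append((url, exc))
    return done, failed
```

A small, fixed `max_workers` also keeps the request rate down, which matters on a site that starts refusing connections when you hit it too often.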

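Hello, I'm 二大爷 (Er Daye),

a migrant worker from an old revolutionary base area who went to the city for work,

a webmaster who was neither early to the internet nor professionally trained,

fond of Python, writing, reading, and English,

a second-rate programmer dabbling in self-media, SEO . . .

This public account doesn't make money; I'm just here to make friends.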

Public account ID: eryeji