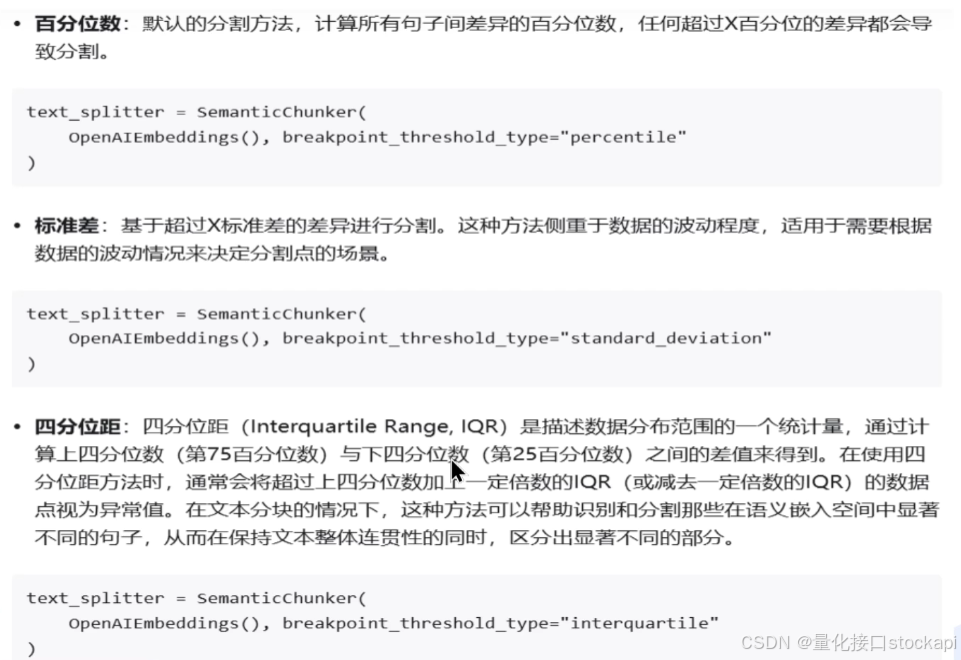

Splitting method:

import os

from langchain_community.embeddings import BaichuanTextEmbeddings
from langchain_experimental.text_splitter import SemanticChunker

with open('test.txt', encoding='utf8') as f:
    text_data = f.read()

os.environ['BAICHUAN_API_KEY'] = 'sk-732b2b80be7bd800cb3a1dbc330722b4'

# Use the Baichuan LLM's text embedding model

# Register your own account on the Baichuan website and generate an API key: https://platform.baichuan-ai.com/homePage

embeddings = BaichuanTextEmbeddings()

# Split using the percentile threshold

text_splitter = SemanticChunker(embeddings, breakpoint_threshold_type='percentile')

docs_list = text_splitter.create_documents([text_data])

print(docs_list[0].page_content)
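To make the percentile idea concrete, here is a minimal offline sketch of the general technique: measure the cosine distance between consecutive sentence embeddings, then start a new chunk wherever that distance exceeds a chosen percentile of all distances. This is not the `SemanticChunker` source code. The function names (`cosine_distance`, `percentile`, `split_by_percentile`), the default of 95, and the hand-written 2-D vectors are assumptions for illustration only; the real splitter gets its vectors from the embedding model and may preprocess sentences differently.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def percentile(values, q):
    """Percentile with linear interpolation between the closest ranks."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def split_by_percentile(sentences, vectors, q=95.0):
    """Group sentences into chunks, breaking where the distance to the
    previous sentence is above the q-th percentile of all distances."""
    distances = [
        cosine_distance(vectors[i], vectors[i + 1])
        for i in range(len(vectors) - 1)
    ]
    if not distances:
        return [" ".join(sentences)]

    threshold = percentile(distances, q)
    chunks, current = [], [sentences[0]]
    for sentence, distance in zip(sentences[1:], distances):
        if distance > threshold:
            # Large semantic jump: close the current chunk and start a new one.
            chunks.append(" ".join(current))
            current = [sentence]
        else:
            current.append(sentence)
    chunks.append(" ".join(current))
    return chunks


# Hypothetical toy data: two "topics" encoded as hand-made 2-D vectors.
sentences = ["Cats purr.", "Cats nap a lot.", "Stocks fell.", "Bonds rose."]
vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]

chunks = split_by_percentile(sentences, vectors)
for chunk in chunks:
    print(chunk)
```

Lowering `q` produces more, smaller chunks, because more of the distances end up above the threshold; raising it keeps only the sharpest topic shifts as breakpoints.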