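The NFS setup in this article is a single point of failure and is meant for practice only. For production, use a CephFS volume type to avoid the single point, or make NFS highly available with keepalived plus Sersync before using it in production. For development or test environments, a single NFS server combined with a MongoDB StatefulSet is, for convenience, the clearest and simplest option.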

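There are two ways to deploy a MongoDB cluster: a replica set only, without sharding, or a sharded cluster. The sharded setup is somewhat more complex, but the basic deployment method is the same as for a plain replica set; it just has more roles. This article covers only deploying a replica set to a k8s cluster.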

Prerequisites: a highly available k8s cluster and an NFS storage class.

Server plan

| Hostname | Role |
|---|---|
| k8s-register-node | Harbor private registry |
| lb-node-1 | nginx load balancer, helm |
| lb-node-2 | nginx load balancer |
| k8s-master-1 | k8s master (controlplane, worker, etcd) |
| k8s-master-2 | k8s master (controlplane, worker, etcd) |
| k8s-master-3 | k8s master (controlplane, worker, etcd) |
| k8s-storage-3 | NFS server |

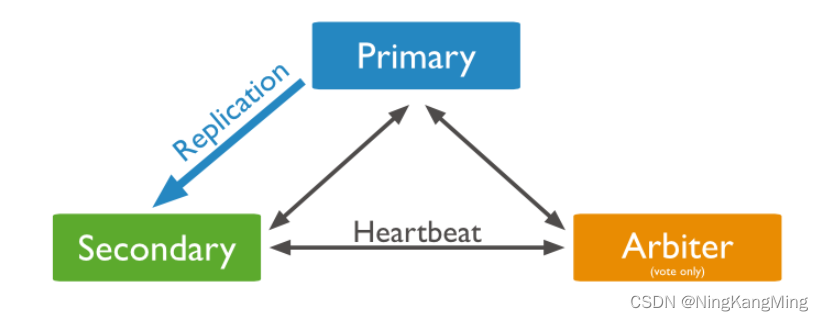

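We deploy a replica set with one primary, one secondary and one arbiter. Because there is only one NFS server, storage remains a single point of failure; apart from that, the MongoDB service itself is highly available. The replication scheme works as follows.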

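As the figure shows, three members are deployed, one of which is an arbiter that stores no data. The primary handles reads and writes; the secondary replicates data from the primary by applying its oplog and can serve reads. Primary elections use a Raft-based protocol.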


Prepare the keyFile

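A MongoDB replica set should have at least three members, with an odd number of voting members. The primary and secondary store data; the arbiter does not. Clients connect to the primary and the secondary but not to the arbiter. The arbiter is a special member that stores no data; its only job is to vote on which secondary is promoted to primary when the primary fails, so clients never need to connect to it.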
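The preference for an odd number of voting members comes down to majority arithmetic. The Python sketch below (function names are illustrative, not from any MongoDB tool) computes the strict majority needed to elect a primary and how many voting members may fail; it assumes every member, including the arbiter, carries one vote, as in the three-member set deployed here.

```python
def majority(voting_members: int) -> int:
    """Votes needed to elect a primary: a strict majority of the voting members."""
    if voting_members < 1:
        raise ValueError("need at least one voting member")
    return voting_members // 2 + 1


def fault_tolerance(voting_members: int) -> int:
    """Voting members that can be lost while a primary can still be elected."""
    return voting_members - majority(voting_members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} voting members: majority={majority(n)}, "
              f"tolerates {fault_tolerance(n)} failure(s)")
```

With 3 voting members (primary, secondary, arbiter) the majority is 2 and one member may fail. A fourth member raises the majority to 3 without tolerating any additional failure, which is why an odd count is preferred. The `"majorityVoteCount" : 2` in the `rs.status()` output at the end of this article agrees with this.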

Every member must have a keyFile, and the keyFile must be identical on all members; otherwise the members cannot authenticate to each other.
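For reference, the keyfile content can also be produced and sanity-checked in Python. This is a sketch under stated assumptions: the validation rules (whitespace ignored, then 6 to 1024 characters from the base64 alphabet) are taken from MongoDB's keyfile documentation as understood here, and the helper names are hypothetical. `generate_keyfile_content` mirrors `openssl rand -base64 756` but emits a single line, which suits the one-line ConfigMap entry used below.

```python
import base64
import os
import re

_BASE64 = re.compile(r"[A-Za-z0-9+/=]+")


def generate_keyfile_content(num_bytes: int = 756) -> str:
    """Random key as one base64 line (openssl wraps its output every 64 chars)."""
    return base64.b64encode(os.urandom(num_bytes)).decode("ascii")


def is_valid_keyfile(content: str) -> bool:
    """Assumed rules: strip whitespace, then require 6-1024 base64 characters."""
    key = re.sub(r"\s", "", content)
    return 6 <= len(key) <= 1024 and _BASE64.fullmatch(key) is not None


if __name__ == "__main__":
    key = generate_keyfile_content()
    print(len(key), is_valid_keyfile(key))  # prints: 1008 True
```

Because 756 is divisible by 3, the base64 output has no padding and is exactly 1008 characters, inside the assumed 1024-character limit.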

```shell
openssl rand -base64 756 > /home/kmning/mongodb/mongodb.key
```

StatefulSet definition

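The PVs come from dynamic provisioning by the NFS storage class, so defining the StatefulSet and the Service is enough to install the MongoDB cluster; the replica set is then initialized manually.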

The keyfile value below is the content of the mongodb.key generated above.

mongodb-statefulset.yaml

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: mongodb-rs-cm
data:
  keyfile: |
    <contents of the keyfile generated above>
  mongod_rs.conf: |+
    systemLog:
      destination: file
      logAppend: true
      path: /data/mongod.log
    storage:
      dbPath: /data
      journal:
        enabled: true
      directoryPerDB: true
      wiredTiger:
        engineConfig:
          cacheSizeGB: 4
          directoryForIndexes: true
    processManagement:
      fork: true
      timeZoneInfo: /usr/share/zoneinfo
      pidFilePath: /data/mongod.pid
    net:
      port: 27017
      bindIp: 0.0.0.0
      maxIncomingConnections: 5000
    security:
      keyFile: /data/configdb/keyfile
      authorization: enabled
    replication:
      oplogSizeMB: 5120
      # must match the _id passed to rs.initiate() when initializing the set
      replSetName: yourReplSet
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mongodb-rs
spec:
  serviceName: mongodb-rs
  replicas: 3
  selector:
    matchLabels:
      app: mongodb-rs
  template:
    metadata:
      labels:
        app: mongodb-rs
    spec:
      containers:
        - name: mongo
          image: xxx.com:443/mongo:4.4.10
          ports:
            - containerPort: 27017
              name: mongo-pod-port
          command: ["sh"]
          args:
            - "-c"
            - |
              set -ex
              mongod --config /data/configdb/mongod_rs.conf
              sleep infinity
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: conf
              mountPath: /data/configdb
              readOnly: false
            - name: data
              mountPath: /data
              readOnly: false
      volumes:
        - name: conf
          configMap:
            name: mongodb-rs-cm
            defaultMode: 0600
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 300Gi
        storageClassName: managed-nfs-storage
```

The ConfigMap is created together with the StatefulSet. This deployment uses the default namespace; in practice, choose your own namespace. Note that the keyfile permissions must be 600 (hence `defaultMode: 0600`), otherwise mongod will not start. Because `fork` is set to true, mongod daemonizes and the shell command returns immediately, so I added `sleep infinity` after the start command; otherwise the container would exit right away.
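The 600 requirement can be illustrated outside Kubernetes with a small Python sketch. The helper names are hypothetical; the check mirrors the rule stated above that mongod refuses a keyfile that grants any permission to group or others, and it assumes a POSIX filesystem.

```python
import os
import stat
import tempfile


def write_keyfile(path: str, content: str) -> None:
    """Write the key and restrict it to owner read/write (0600)."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write(content + "\n")
    os.chmod(path, 0o600)  # also fixes the mode if the file already existed


def is_too_open(path: str) -> bool:
    """True if group or others have any permission bit set."""
    return (stat.S_IMODE(os.stat(path).st_mode) & 0o077) != 0


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "keyfile")
        write_keyfile(path, "c29tZS1rZXk=")
        print(oct(stat.S_IMODE(os.stat(path).st_mode)), is_too_open(path))
        os.chmod(path, 0o644)  # the kind of mode a plain ConfigMap mount might give
        print(is_too_open(path))
    # YAML reads `defaultMode: 0600` as octal; a JSON manifest needs the decimal 384.
    print(0o600)
```

The last line is a reminder that Kubernetes accepts octal mode bits only in YAML; the same `defaultMode` written in JSON must be given in decimal.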

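Note that the ConfigMap effectively defines two files, keyfile and mongod_rs.conf, and mongod_rs.conf refers to the keyfile directly. The StatefulSet pod template mounts the configuration directory (/data/configdb, from the ConfigMap) and the data directory (/data, from the PVC) that mongod_rs.conf uses. With these, k8s builds the StatefulSet from the configuration files and the pod template, while the PVs are handled by the NFS storage class.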

Note that the keyfile content above is stored as a single line and must not contain tabs.

Install the StatefulSet above:

```shell
kmning@k8s-master-1:~/mongodb$ kubectl create -f mongodb-statefulset.yaml
configmap/mongodb-rs-cm created
statefulset.apps/mongodb-rs created
```

Check the status:

```shell
kmning@k8s-master-1:~/mongodb$ kubectl get pods
NAME           READY   STATUS    RESTARTS   AGE
mongodb-rs-0   1/1     Running   0          4m26s
mongodb-rs-1   1/1     Running   0          4m1s
mongodb-rs-2   1/1     Running   0          3m35s
```

The three Pods were created in order and are running. Listing the PVs and PVCs shows that the NFS storage class has dynamically provisioned three of each for them.
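The Pod and PVC names in these listings follow the StatefulSet naming convention: Pods are `<StatefulSet name>-<ordinal>` and each Pod's claim is `<volumeClaimTemplate name>-<Pod name>`. A tiny Python sketch (function names are illustrative only) reproduces the names seen here from the manifest values `mongodb-rs`, `data` and `replicas: 3`.

```python
def pod_names(statefulset: str, replicas: int) -> list:
    """StatefulSet Pods are named <statefulset>-<ordinal>, ordinals starting at 0."""
    return [f"{statefulset}-{i}" for i in range(replicas)]


def pvc_names(claim_template: str, statefulset: str, replicas: int) -> list:
    """Each Pod gets its own PVC named <volumeClaimTemplate name>-<Pod name>."""
    return [f"{claim_template}-{pod}" for pod in pod_names(statefulset, replicas)]


if __name__ == "__main__":
    print(pvc_names("data", "mongodb-rs", 3))
    # ['data-mongodb-rs-0', 'data-mongodb-rs-1', 'data-mongodb-rs-2']
```

Because the claim name depends only on the Pod's ordinal, a recreated `mongodb-rs-1` binds to the same `data-mongodb-rs-1` volume and keeps its data.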

```shell
kmning@k8s-master-1:~/mongodb$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                       STORAGECLASS          REASON   AGE
pvc-14d12757-c32e-4e26-857e-7e6fb22c637f   10Gi       RWX            Delete           Bound    default/data-nacos-0        managed-nfs-storage            6d6h
pvc-50a5d0e7-0ae1-41a3-ae43-29c8279da460   300Gi      RWO            Delete           Bound    default/data-mongodb-rs-2   managed-nfs-storage            3m6s
pvc-a213fa79-bf84-465d-b7ee-fe9a2713d329   300Gi      RWO            Delete           Bound    default/data-mongodb-rs-0   managed-nfs-storage            3m57s
pvc-b9629010-ce4b-4c04-83fb-ee2feae32040   300Gi      RWO            Delete           Bound    default/data-mongodb-rs-1   managed-nfs-storage            3m31s
pvc-bb1beed7-5303-4fea-9bc1-4b1c3f762b18   10Gi       RWX            Delete           Bound    default/data-nacos-2        managed-nfs-storage            6d6h
pvc-cb9de849-bfaa-4c42-9328-c90831336c68   10Gi       RWX            Delete           Bound    default/data-nacos-1        managed-nfs-storage            6d6h
kmning@k8s-master-1:~/mongodb$ kubectl get pvc
NAME                STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
data-mongodb-rs-0   Bound    pvc-a213fa79-bf84-465d-b7ee-fe9a2713d329   300Gi      RWO            managed-nfs-storage   3m54s
data-mongodb-rs-1   Bound    pvc-b9629010-ce4b-4c04-83fb-ee2feae32040   300Gi      RWO            managed-nfs-storage   3m28s
data-mongodb-rs-2   Bound    pvc-50a5d0e7-0ae1-41a3-ae43-29c8279da460   300Gi      RWO            managed-nfs-storage   3m2s
data-nacos-0        Bound    pvc-14d12757-c32e-4e26-857e-7e6fb22c637f   10Gi       RWX            managed-nfs-storage   6d6h
data-nacos-1        Bound    pvc-cb9de849-bfaa-4c42-9328-c90831336c68   10Gi       RWX            managed-nfs-storage   6d6h
data-nacos-2        Bound    pvc-bb1beed7-5303-4fea-9bc1-4b1c3f762b18   10Gi       RWX            managed-nfs-storage   6d6h
```

Exposing the Pods with a Service

The three Pods of the MongoDB StatefulSet are now running, but nothing can reach them yet. First create a ClusterIP Service so that other Pods can access them.

mongod-svc.yml

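```yaml
apiVersion: v1
kind: Service
metadata:
  name: mongodb-rs
  namespace: default
spec:
  ports:
    - port: 27017
      protocol: TCP
      targetPort: mongo-pod-port
  selector:
    app: mongodb-rs
  type: ClusterIP
```

Note that the Service name must match the `serviceName` defined in the StatefulSet. Also, `targetPort` uses the name of the port defined on the MongoDB container, so even if the backend MongoDB port changes, the Service does not need to be modified.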
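The three consistency rules in the note above (Service name equals `serviceName`, selector matches the pod labels, a named `targetPort` exists on the container) can be checked mechanically. The Python sketch below is a hypothetical helper working on trimmed dict copies of the two manifests, keeping only the fields it reads; loading the real YAML files (for example with PyYAML) is assumed and not shown.

```python
def check_service(service: dict, statefulset: dict) -> list:
    """Return human-readable problems; an empty list means the manifests agree."""
    problems = []
    sts = statefulset["spec"]
    pod_template = sts["template"]

    # 1. serviceName must name this Service (it determines the Pod DNS names).
    if service["metadata"]["name"] != sts["serviceName"]:
        problems.append("Service name differs from StatefulSet serviceName")

    # 2. Every selector label must appear, with the same value, on the pod template.
    labels = pod_template["metadata"]["labels"]
    for key, value in service["spec"]["selector"].items():
        if labels.get(key) != value:
            problems.append(f"selector {key}={value} does not match pod labels")

    # 3. A string targetPort must refer to a named container port.
    port_names = {
        port.get("name")
        for container in pod_template["spec"]["containers"]
        for port in container.get("ports", [])
    }
    for port in service["spec"]["ports"]:
        target = port.get("targetPort", port["port"])
        if isinstance(target, str) and target not in port_names:
            problems.append(f"targetPort {target!r} is not a named container port")
    return problems


# Trimmed copies of the manifests in this article.
service = {
    "metadata": {"name": "mongodb-rs", "namespace": "default"},
    "spec": {
        "ports": [{"port": 27017, "protocol": "TCP", "targetPort": "mongo-pod-port"}],
        "selector": {"app": "mongodb-rs"},
        "type": "ClusterIP",
    },
}
statefulset = {
    "metadata": {"name": "mongodb-rs"},
    "spec": {
        "serviceName": "mongodb-rs",
        "template": {
            "metadata": {"labels": {"app": "mongodb-rs"}},
            "spec": {"containers": [
                {"name": "mongo",
                 "ports": [{"containerPort": 27017, "name": "mongo-pod-port"}]},
            ]},
        },
    },
}

if __name__ == "__main__":
    print(check_service(service, statefulset) or "Service and StatefulSet agree")
```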

Create it:

```shell
euht@k8s-master-1:~/mongodb$ kubectl create -f mongod-svc.yml
service/mongodb-rs created
```

After creation, k8s assigns the Service a cluster IP, which can be viewed as follows:

```shell
kmning@k8s-master-1:~/mongodb$ kubectl get svc mongodb-rs -o yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2023-04-27T02:20:58Z"
  name: mongodb-rs
  namespace: default
  resourceVersion: "3746349"
  uid: 758812a2-9d5c-457f-ab91-264fafbf9998
spec:
  clusterIP: 10.43.149.68
  clusterIPs:
  - 10.43.149.68
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - port: 27017
    protocol: TCP
    targetPort: mongo-pod-port
  selector:
    app: mongodb-rs
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
```

Within the k8s cluster, the backend MongoDB Pods can now be reached through the cluster IP 10.43.149.68.

```shell
kmning@k8s-master-1:~/mongodb$ kubectl describe svc mongodb-rs
Name:              mongodb-rs
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app=mongodb-rs
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.43.149.68
IPs:               10.43.149.68
Port:              <unset>  27017/TCP
TargetPort:        mongo-pod-port/TCP
Endpoints:         10.42.0.33:27017,10.42.2.36:27017,10.42.4.13:27017
Session Affinity:  None
Events:            <none>
```

This cluster IP has three Endpoints behind it, one for each MongoDB Pod.

We can now configure the replica set with domain names and no longer need to care about Pod IPs, which change. The domain name format is `$(podname).$(service name).$(namespace).svc.cluster.local`.
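The name pattern can be written as a one-line Python helper. `pod_fqdn` is a hypothetical name, and the default `cluster.local` is an assumption: it is the usual cluster domain, but a cluster can be configured with a different one.

```python
def pod_fqdn(pod: str, service: str, namespace: str,
             cluster_domain: str = "cluster.local") -> str:
    """Stable DNS name of a StatefulSet Pod: <pod>.<service>.<namespace>.svc.<domain>."""
    return f"{pod}.{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    for i in range(3):
        print(pod_fqdn(f"mongodb-rs-{i}", "mongodb-rs", "default"))
```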

As a check, connect from mongodb-rs-0 to mongodb-rs-2 by its domain name:

```shell
kmning@k8s-master-1:~/mongodb$ kubectl exec -it mongodb-rs-0 -- mongo mongodb-rs-2.mongodb-rs.default.svc.cluster.local
MongoDB shell version v4.4.10
connecting to: mongodb://mongodb-rs-2.mongodb-rs.default.svc.cluster.local:27017/test?compressors=disabled&gssapiServiceName=mongodb
Implicit session: session { "id" : UUID("64c8e67e-50ca-480a-ab5d-473b291239b2") }
MongoDB server version: 4.4.10
Welcome to the MongoDB shell.
For interactive help, type "help".
For more comprehensive documentation, see
    https://docs.mongodb.com/
Questions? Try the MongoDB Developer Community Forums
    https://community.mongodb.com
>
```

The connection works. When connecting by this name, the cluster DNS resolves the per-Pod name directly to the IP of the mongodb-rs-2 Pod, not to the Service's cluster IP. However the MongoDB Pods are recreated, this name stays the same, so a stateful configuration written with these names does not break when a Pod's IP changes.

Initializing the replica set

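The MongoDB members are now running, but they are not yet linked to each other. Log in to any one of them and initialize the replica set. In the `host` fields, use the service-based domain names rather than IPs, because the DNS names of StatefulSet Pods are stable.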
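Since the member hosts follow directly from the naming pattern, the `rs.initiate()` document can be generated rather than typed by hand. In this Python sketch the helper names are hypothetical; the default StatefulSet, Service, namespace, port and `cluster.local` domain are the values used in this article. `preferred_primary` uses MongoDB's default priority of 1 for data-bearing members; priority expresses a preference, so the highest-priority member is the expected primary rather than a guaranteed one.

```python
import json


def member_host(ordinal, sts="mongodb-rs", service="mongodb-rs", namespace="default",
                port=27017, cluster_domain="cluster.local"):
    return f"{sts}-{ordinal}.{service}.{namespace}.svc.{cluster_domain}:{port}"


def build_replset_config(set_name, replicas, priorities=None, arbiters=()):
    """Build the document passed to rs.initiate(); set_name must equal replSetName."""
    priorities = priorities or {}
    members = []
    for i in range(replicas):
        member = {"_id": i, "host": member_host(i)}
        if i in arbiters:
            member["arbiterOnly"] = True  # votes in elections, stores no data
        elif i in priorities:
            member["priority"] = priorities[i]
        members.append(member)
    return {"_id": set_name, "members": members}


def preferred_primary(config):
    """Host of the data-bearing member with the highest priority (default 1)."""
    candidates = [m for m in config["members"] if not m.get("arbiterOnly")]
    return max(candidates, key=lambda m: m.get("priority", 1))["host"]


if __name__ == "__main__":
    cfg = build_replset_config("yourReplSet", 3, priorities={0: 50, 1: 60}, arbiters={2})
    print("rs.initiate(" + json.dumps(cfg, indent=2) + ")")
    print("expected primary:", preferred_primary(cfg))
```

The printed JSON is also a valid mongo shell object literal, so it can be pasted into the shell session below.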

```shell
kubectl exec -it mongodb-rs-0 -- mongo
```

Initialize:

```javascript
use admin;
rs.initiate({
  _id: "yourReplSet",  // must equal replication.replSetName in mongod_rs.conf
  members: [
    { _id: 0, host: "mongodb-rs-0.mongodb-rs.default.svc.cluster.local:27017", priority: 50 },
    { _id: 1, host: "mongodb-rs-1.mongodb-rs.default.svc.cluster.local:27017", priority: 60 },
    { _id: 2, host: "mongodb-rs-2.mongodb-rs.default.svc.cluster.local:27017", arbiterOnly: true }
  ]
})
```

Check the status:

```javascript
yourReplSet:SECONDARY> rs.status();
{
  "set" : "yourReplSet",
  "date" : ISODate("2023-04-26T10:13:56.173Z"),
  "myState" : 2,
  "term" : NumberLong(2),
  "syncSourceHost" : "mongodb-rs-1.mongodb-rs.default.svc.cluster.local:27017",
  "syncSourceId" : 1,
  "heartbeatIntervalMillis" : NumberLong(2000),
  "majorityVoteCount" : 2,
  "writeMajorityCount" : 2,
  "votingMembersCount" : 3,
  "writableVotingMembersCount" : 2,
  "optimes" : {
    "lastCommittedOpTime" : { "ts" : Timestamp(1682504019, 1), "t" : NumberLong(2) },
    "lastCommittedWallTime" : ISODate("2023-04-26T10:13:39.160Z"),
    "readConcernMajorityOpTime" : { "ts" : Timestamp(1682504019, 1), "t" : NumberLong(2) },
    "readConcernMajorityWallTime" : ISODate("2023-04-26T10:13:39.160Z"),
    "appliedOpTime" : { "ts" : Timestamp(1682504019, 1), "t" : NumberLong(2) },
    "durableOpTime" : { "ts" : Timestamp(1682504019, 1), "t" : NumberLong(2) },
    "lastAppliedWallTime" : ISODate("2023-04-26T10:13:39.160Z"),
    "lastDurableWallTime" : ISODate("2023-04-26T10:13:39.160Z")
  },
  "lastStableRecoveryTimestamp" : Timestamp(1682503991, 4),
  "electionParticipantMetrics" : {
    "votedForCandidate" : true,
    "electionTerm" : NumberLong(2),
    "lastVoteDate" : ISODate("2023-04-26T10:13:22.875Z"),
    "electionCandidateMemberId" : 1,
    "voteReason" : "",
    "lastAppliedOpTimeAtElection" : { "ts" : Timestamp(1682503992, 1), "t" : NumberLong(1) },
    "maxAppliedOpTimeInSet" : { "ts" : Timestamp(1682503992, 1), "t" : NumberLong(1) },
    "priorityAtElection" : 50,
    "newTermStartDate" : ISODate("2023-04-26T10:13:09.158Z"),
    "newTermAppliedDate" : ISODate("2023-04-26T10:13:23.890Z")
  },
  "members" : [
    {
      "_id" : 0,
      "name" : "mongodb-rs-0.mongodb-rs.default.svc.cluster.local:27017",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 3246,
      "optime" : { "ts" : Timestamp(1682504019, 1), "t" : NumberLong(2) },
      "optimeDate" : ISODate("2023-04-26T10:13:39Z"),
      "syncSourceHost" : "mongodb-rs-1.mongodb-rs.default.svc.cluster.local:27017",
      "syncSourceId" : 1,
      "infoMessage" : "",
      "configVersion" : 1,
      "configTerm" : 2,
      "self" : true,
      "lastHeartbeatMessage" : ""
    },
    {
      "_id" : 1,
      "name" : "mongodb-rs-1.mongodb-rs.default.svc.cluster.local:27017",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 55,
      "optime" : { "ts" : Timestamp(1682504019, 1), "t" : NumberLong(2) },
      "optimeDurable" : { "ts" : Timestamp(1682504019, 1), "t" : NumberLong(2) },
      "optimeDate" : ISODate("2023-04-26T10:13:39Z"),
      "optimeDurableDate" : ISODate("2023-04-26T10:13:39Z"),
      "lastHeartbeat" : ISODate("2023-04-26T10:13:55.921Z"),
      "lastHeartbeatRecv" : ISODate("2023-04-26T10:13:54.904Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "",
      "electionTime" : Timestamp(1682503992, 2),
      "electionDate" : ISODate("2023-04-26T10:13:12Z"),
      "configVersion" : 1,
      "configTerm" : 2
    },
    {
      "_id" : 2,
      "name" : "mongodb-rs-2.mongodb-rs.default.svc.cluster.local:27017",
      "health" : 1,
      "state" : 7,
      "stateStr" : "ARBITER",
      "uptime" : 55,
      "lastHeartbeat" : ISODate("2023-04-26T10:13:55.922Z"),
      "lastHeartbeatRecv" : ISODate("2023-04-26T10:13:54.932Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "",
      "configVersion" : 1,
      "configTerm" : 2
    }
  ],
  "ok" : 1,
  "$clusterTime" : {
    "clusterTime" : Timestamp(1682504019, 1),
    "signature" : {
      "hash" : BinData(0,"KM4OLkjcMxpk7RqlTC2omtRQ0pY="),
      "keyId" : NumberLong("7226299616734478340")
    }
  },
  "operationTime" : Timestamp(1682504019, 1)
}
```

The replica set is running on k8s: mongodb-rs-1 (priority 60) has been elected PRIMARY, mongodb-rs-0 is SECONDARY, and mongodb-rs-2 is the ARBITER.
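Reading `rs.status()` by eye works for three members; a scripted check is a small step further. The Python sketch below uses hypothetical helper names and hard-codes the member fields from the output above. In practice the document might be fetched with a driver, for example PyMongo's `client.admin.command("replSetGetStatus")`, which is not shown here. The health rule (exactly one PRIMARY, every member reporting `health` 1) is a simple assumption, not an official MongoDB criterion.

```python
from collections import defaultdict

# Member fields copied from the rs.status() output above (other fields omitted).
MEMBERS = [
    {"_id": 0, "name": "mongodb-rs-0.mongodb-rs.default.svc.cluster.local:27017",
     "health": 1, "stateStr": "SECONDARY"},
    {"_id": 1, "name": "mongodb-rs-1.mongodb-rs.default.svc.cluster.local:27017",
     "health": 1, "stateStr": "PRIMARY"},
    {"_id": 2, "name": "mongodb-rs-2.mongodb-rs.default.svc.cluster.local:27017",
     "health": 1, "stateStr": "ARBITER"},
]


def members_by_state(members):
    """Group member names by their stateStr (PRIMARY, SECONDARY, ARBITER, ...)."""
    grouped = defaultdict(list)
    for m in members:
        grouped[m["stateStr"]].append(m["name"])
    return dict(grouped)


def looks_healthy(members):
    """Exactly one PRIMARY and every member reachable (health == 1)."""
    primaries = [m for m in members if m["stateStr"] == "PRIMARY"]
    return len(primaries) == 1 and all(m["health"] == 1 for m in members)


if __name__ == "__main__":
    for state, names in members_by_state(MEMBERS).items():
        print(state, names)
    print("healthy:", looks_healthy(MEMBERS))
```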